“What is the number one thing people should do more of when using machine learning?” Over the years, experts have consistently given the same answer: “I wish people understood how crucial it is to repeatedly train and test with representative data, and were more skeptical of the results.”

Why is it so important to have training and test data?

In this article, we cover the principles of training and test data, why experts apply them so rigorously, and why they want everyone else to do the same.

When you build a machine learning model for prediction, you need to provide some data for it to base its predictions on. For example, if you are building a model to predict which sports team is likely to win a tournament, you will provide it with data on teams and winners from past tournaments. The data that you use to build this model is called the training dataset.

The training dataset forms your machine learning model’s core knowledge. The model cannot predict beyond what it infers from the training dataset, so your training data needs to be representative of the data the model will see in practice. For example, if your model is built to predict traffic from Monday to Friday and your training data consists only of past traffic data from weekends, then it will most likely predict poorly.

How do you know if you are training your model properly and using the right training dataset?

The answer is complicated, but the simplest and most direct way to find out whether you have trained your machine learning model well is to test it. This is where your test dataset comes in. The test dataset needs to be independent of your training data. In other words, you should not test the model on the same data that you used to train it, because a model can score well on data it has already seen without being able to predict anything new. This is cheating in machine learning terms!
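As a rough illustration of keeping the two datasets independent, here is a minimal Python sketch of a simple hold-out split. The function name `train_test_split`, the 20% test fraction, and the toy `records` list are all assumptions made for this example rather than anything prescribed above.

```python
import random


def train_test_split(rows, test_fraction=0.2, seed=0):
    """Shuffle a copy of rows and split it into disjoint training and test lists."""
    if not 0 < test_fraction < 1:
        raise ValueError("test_fraction must be between 0 and 1")
    shuffled = list(rows)                      # copy, so the caller's data is untouched
    random.Random(seed).shuffle(shuffled)      # seeded shuffle for repeatable splits
    n_test = max(1, round(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]


# Hypothetical records: (team_id, won_tournament). Purely illustrative data.
records = [(team_id, team_id % 3 == 0) for team_id in range(50)]
train_rows, test_rows = train_test_split(records, test_fraction=0.2, seed=42)

# No record appears in both sets, so the test set stays unseen during training.
overlap = set(train_rows) & set(test_rows)
```

Shuffling before splitting matters when the data arrives in some order (for example, by date or by team), since an unshuffled split could leave the test set unrepresentative.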

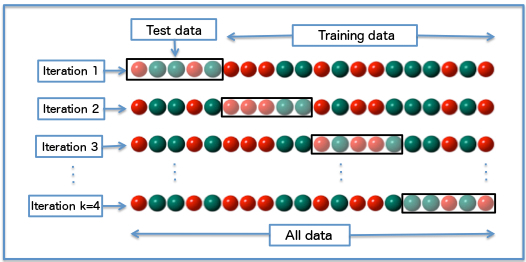

One common way to ensure that you are training and testing properly is k-fold cross-validation. Cross-validation is a technique that partitions your sample of data into training and test sets. Experts often default to 10-fold cross-validation, which splits your data into ten partitions, then uses one partition as test data and the other nine as training data. This is repeated ten times, so that each partition serves as the test data exactly once.

The average accuracy of the model's predictions across the ten rounds is then used to evaluate how good your model is.
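The procedure can be sketched in plain Python as follows. This is only an illustration under stated assumptions: the "model" is a placeholder that always predicts the most common label in its training data, and the names `k_fold_indices`, `majority_label`, `cross_validate`, and the toy `labels` list are hypothetical.

```python
import random
from collections import Counter


def k_fold_indices(n_rows, k=10, seed=0):
    """Shuffle row indices and deal them into k disjoint folds of near-equal size."""
    indices = list(range(n_rows))
    random.Random(seed).shuffle(indices)
    return [indices[i::k] for i in range(k)]


def majority_label(labels):
    """Placeholder 'model': return the most common label seen in training."""
    return Counter(labels).most_common(1)[0][0]


def cross_validate(labels, k=10, seed=0):
    """Return (mean accuracy, per-fold accuracies) for the placeholder model."""
    scores = []
    for test_idx in k_fold_indices(len(labels), k, seed):
        held_out = set(test_idx)
        # Train only on rows outside the current test fold.
        train_labels = [labels[i] for i in range(len(labels)) if i not in held_out]
        prediction = majority_label(train_labels)
        correct = sum(labels[i] == prediction for i in test_idx)
        scores.append(correct / len(test_idx))
    return sum(scores) / len(scores), scores


# Toy data (an assumption for this sketch): 70 "home" wins and 30 "away" wins.
labels = ["home"] * 70 + ["away"] * 30
mean_accuracy, fold_scores = cross_validate(labels, k=10, seed=1)
```

The per-fold scores are worth inspecting alongside the mean, since a large variation between folds is itself a reason to be skeptical of the headline number.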

If you can afford the time and resources, a further extension of 10-fold cross-validation is 10×10-fold cross-validation. Here you repeat 10-fold cross-validation ten times, reshuffling the dataset before each repetition so that the partitions differ every time.
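A minimal sketch of the repeated variant is shown below, again in plain Python and again with a placeholder majority-label "model". The names `repeated_k_fold` and `evaluate`, the toy `labels`, and the choice to report the standard deviation alongside the mean are assumptions for illustration.

```python
import random
from statistics import mean, stdev


def repeated_k_fold(n_rows, k=10, repeats=10, seed=0):
    """Yield (repeat, train_idx, test_idx), reshuffling before each repetition."""
    rng = random.Random(seed)
    for repeat in range(repeats):
        indices = list(range(n_rows))
        rng.shuffle(indices)                   # new random partitioning per repetition
        for fold in range(k):
            test_idx = indices[fold::k]
            held_out = set(test_idx)
            train_idx = [i for i in indices if i not in held_out]
            yield repeat, train_idx, test_idx


def evaluate(train_idx, test_idx, labels):
    """Placeholder model: predict the most common training label, return accuracy."""
    train_labels = [labels[i] for i in train_idx]
    prediction = max(set(train_labels), key=train_labels.count)
    return sum(labels[i] == prediction for i in test_idx) / len(test_idx)


# Toy data (an assumption for this sketch): 70 "home" wins and 30 "away" wins.
labels = ["home"] * 70 + ["away"] * 30
scores = [evaluate(tr, te, labels) for _, tr, te in repeated_k_fold(len(labels))]

mean_accuracy = mean(scores)   # averaged over all 10 x 10 = 100 train/test rounds
spread = stdev(scores)         # how much accuracy varies from round to round
```

With 100 train/test rounds instead of 10, the estimate depends less on how any single shuffle happened to partition the data, at roughly ten times the computing cost.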

In conclusion, training and testing is important for evaluating your machine learning models. Experts say you need to be more skeptical of results and make sure you repeatedly train and test your model. One common way to begin evaluating is to use cross-validation.

Bringing yourself or your entire development team up to speed on fundamental machine learning principles is important. Make sure you are not missing out on the crucial details that make or break your machine learning initiatives. If you are having trouble kicking off your machine learning training, we can help! Contact us or visit our course catalog to learn more about our interactive short courses.